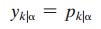

In this problem, we explore the use of the Kullback-Leibler divergence (KLD) to derive a supervised-learning algorithm for multilayer perceptrons (Hopfield, 1987; Baum and Wilczek, 1988). To be specific, consider a multilayer perceptron consisting of an input layer, a hidden layer, and an output layer. Given a case or example α presented to the input, the output of neuron k in the output layer is assigned the probabilistic interpretation

$$y_{k|\alpha} = p_{k|\alpha}$$

Correspondingly, let $q_{k|\alpha}$ denote the actual (true) value of the conditional probability that the proposition k is true, given the input case α. The KLD for the multilayer perceptron is defined by

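$$D_{p\|q} = \sum_{\alpha} p_{\alpha} \sum_{k} \left( q_{k|\alpha} \log\frac{q_{k|\alpha}}{p_{k|\alpha}} + \left(1 - q_{k|\alpha}\right) \log\frac{1 - q_{k|\alpha}}{1 - p_{k|\alpha}} \right)$$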